I built a simple AI system that creates images of handwritten numbers. It's a relatively simple example, but it's something I can build on when I have time to create more sophisticated images.

Here I have broken down the concept and hopefully given a simplified explanation. For those more technical, this explanation isn’t aimed at you.

To get us started, let’s admire these two images created by Bing Create using the prompts:

- a sunny day with hills and a lake

- a sunny day with hills and a lake and a killer robot

How does AI make images?

One of the best-known techniques for creating images using AI is the Generative Adversarial Network, more commonly referred to as a GAN.

GANs are a type of artificial neural network.

Most regular neural networks are trained on examples that pair some input data with its known outcome. For example, when I was doing my PhD, I would train them on a patient's medical data along with the outcome (did they die? did they come back into the hospital?). I would need to give it data from hundreds, sometimes thousands, of patients. The neural network would try to learn the patterns linking the medical data to the outcomes, developing the ability to predict the outcome (death/readmission) for new patients. When we use both the input and output data, we refer to this process as ‘supervised learning’.
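To make supervised learning a little more concrete, here is a minimal sketch in plain Python (rather than PyTorch, so it runs with no extra installs). It trains the simplest possible "network", a single logistic neuron, on invented records where every input comes paired with its outcome. The two features, the rule that decides the outcome and the function names (`train_supervised`, `predict`) are all hypothetical; nothing here comes from the medical data described above.

```python
import math
import random


def sigmoid(t):
    """Numerically stable logistic function: squashes any number into (0, 1)."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    z = math.exp(t)
    return z / (1.0 + z)


def predict(model, inputs):
    """Probability that the outcome is 1 for one set of inputs."""
    weights, bias = model
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)


def train_supervised(examples, epochs=200, lr=0.5):
    """Fit a single logistic 'neuron' to (inputs, outcome) pairs.

    Supervised learning: every training example comes with its known outcome.
    """
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, outcome in examples:
            # For log-loss, the gradient w.r.t. the neuron's pre-activation is (prediction - outcome).
            error = predict((weights, bias), inputs) - outcome
            weights = [w - lr * error * x for w, x in zip(weights, inputs)]
            bias -= lr * error
    return weights, bias


# Hypothetical toy records: two made-up features per "patient", scaled to [0, 1].
# The outcome follows an invented rule so the example can check itself.
random.seed(0)
records = []
for _ in range(200):
    features = [random.random(), random.random()]
    outcome = 1 if features[0] + features[1] > 1.0 else 0
    records.append((features, outcome))

model = train_supervised(records)
print(round(predict(model, [0.9, 0.9]), 3))  # a new record deep in the "outcome = 1" region
print(round(predict(model, [0.1, 0.1]), 3))  # a new record deep in the "outcome = 0" region
```

Every training example includes the answer, which is exactly what a GAN doesn't get.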

GANs are different.

Firstly, they are not a single neural network; instead, they are two neural networks. Secondly, they are unsupervised, meaning they are never given the outcome.

These two neural networks are called a Discriminator and a Generator.
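For the more curious, here is a deliberately tiny sketch of the two roles in plain Python. Real image GANs use much bigger networks; here each "image" is shrunk to a single number, and the class and attribute names are hypothetical. The Generator turns random noise into a sample, and the Discriminator turns a sample into a score between 0 and 1 (how real it looks).

```python
import math
import random


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    z = math.exp(t)
    return z / (1.0 + z)


class Generator:
    """Toy Generator: turns one random noise value z into one 'sample' a * z + b."""

    def __init__(self):
        self.a = 1.0  # scale, an untrained starting guess
        self.b = 0.0  # shift, an untrained starting guess

    def __call__(self, z):
        return self.a * z + self.b


class Discriminator:
    """Toy Discriminator: returns a score in (0, 1) for how 'real' a sample looks."""

    def __init__(self):
        self.w = 0.0
        self.c = 0.0

    def __call__(self, x):
        return sigmoid(self.w * x + self.c)


random.seed(1)
generator = Generator()
discriminator = Discriminator()
noise = random.gauss(0.0, 1.0)  # the Generator's only input is random noise
fake = generator(noise)
print(fake, discriminator(fake))  # an untrained Discriminator scores everything 0.5
```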

So how do they work? (the non-technical explanation)

The Discriminator and the Generator are trained together, taking turns in alternating steps.

Using the handwritten numbers as an example…

We pass some examples of real handwritten images to the Discriminator model, which tries to learn the patterns.

At the same time, we feed some random noise into the Generator, which tries to turn it into a new image.

This new image is then passed to the Discriminator, which essentially decides whether the new image is what it would expect to see (based on the patterns it has seen before). For example, “does this new image look like a handwritten number?”

It is not saying – “does this new image look like a number 8 or a number 9” – it is simply – “does this look like a number” or perhaps a better way of explaining it is “I’ve seen a lot of images, is this the same type of image I’m used to seeing?”
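Under the hood, the Discriminator's judgement is usually learned as a yes/no classification: real images are labelled 1, the Generator's images are labelled 0, and it is trained with a binary cross-entropy loss. A minimal sketch, assuming single numbers instead of images and made-up data (real samples around 4, untrained-Generator samples around 0); `train_discriminator` is a hypothetical name.

```python
import math
import random


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    z = math.exp(t)
    return z / (1.0 + z)


def train_discriminator(real_samples, fake_samples, lr=0.05):
    """Logistic Discriminator D(x) = sigmoid(w*x + c), trained with binary cross-entropy.

    Real samples are labelled 1 ("looks real"), generated samples 0 ("looks fake").
    """
    w, c = 0.0, 0.0
    for real, fake in zip(real_samples, fake_samples):
        d_real = sigmoid(w * real + c)
        d_fake = sigmoid(w * fake + c)
        # Gradient descent on the loss -log D(real) - log(1 - D(fake)).
        w -= lr * (-(1.0 - d_real) * real + d_fake * fake)
        c -= lr * (-(1.0 - d_real) + d_fake)
    return lambda x: sigmoid(w * x + c)


random.seed(2)
steps = 2000
real_data = [random.gauss(4.0, 1.0) for _ in range(steps)]  # stand-in for real handwritten images
fake_data = [random.gauss(0.0, 1.0) for _ in range(steps)]  # stand-in for an untrained Generator's output

D = train_discriminator(real_data, fake_data)
print(round(D(4.0), 3), round(D(0.0), 3))  # high score for real-looking, low for fake-looking
```

The low score is the "bad score" the Generator later receives as feedback.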

It’s a little like the hot-and-cold game: “you’re getting hotter (closer)” or “you’re getting colder (further away)”.

The Generator uses this feedback to learn and try again, generating another image. The process repeats over and over.
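The "hot and cold" feedback is the gradient of the Discriminator's score with respect to the Generator's output. Here is a sketch of the Generator's update, assuming the same toy one-number setup, a hypothetical frozen Discriminator D(x) = sigmoid(4x - 8) (which rates anything above 2 as more likely real than fake), and the common "non-saturating" Generator loss -log D(G(z)); the function name `generator_step` is made up.

```python
import math
import random


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    z = math.exp(t)
    return z / (1.0 + z)


def generator_step(a, b, w, c, noise_batch, lr=0.1):
    """One update of the toy Generator G(z) = a*z + b against a fixed D(x) = sigmoid(w*x + c).

    Minimises -log D(G(z)), i.e. nudges a and b so D scores the samples as more real.
    """
    grad_a = grad_b = 0.0
    for z in noise_batch:
        x = a * z + b
        feedback = -(1.0 - sigmoid(w * x + c)) * w  # derivative of -log D(x) with respect to x
        grad_a += feedback * z  # chain rule: dx/da = z
        grad_b += feedback      # chain rule: dx/db = 1
    n = len(noise_batch)
    return a - lr * grad_a / n, b - lr * grad_b / n


w, c = 4.0, -8.0  # hypothetical frozen Discriminator
a, b = 1.0, 0.0   # untrained Generator: its outputs are just the noise
rng = random.Random(3)
history = [b]
for _ in range(20):
    noise = [rng.gauss(0.0, 1.0) for _ in range(32)]
    a, b = generator_step(a, b, w, c, noise)
    history.append(b)
print([round(v, 2) for v in history[::5]])  # b climbs: the Generator is "getting warmer"
```

Because this Discriminator is frozen, running many more steps would keep pushing `b` upwards past the real data. In a real GAN the Discriminator keeps learning too, which is what reins the Generator back in.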

Example

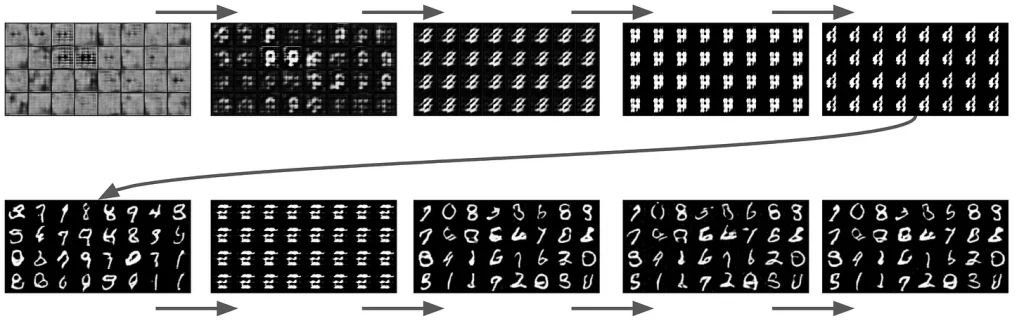

Using the GAN I built, we can see below how the AI system learned and improved over time.

Here it creates 32 handwritten numbers, shown in an 8 x 4 grid.

In the first image (top left), each of the 32 images is just random noise. All of those images get shown to the Discriminator, which has only just started learning what real numbers look like, and it says “those images you showed me look rubbish”. The AI system says they ‘look rubbish’ by giving them a bad score.

The Generator uses this bad score to update its internal workings and tries again (image 2).

Over time, the system starts to generate images that look like real numbers. In this example, they look pretty good by the 6th attempt.

The 7th image shows it getting worse again; this sometimes happens when training GANs. But given enough training time, it gets back on track (see images 8–10).

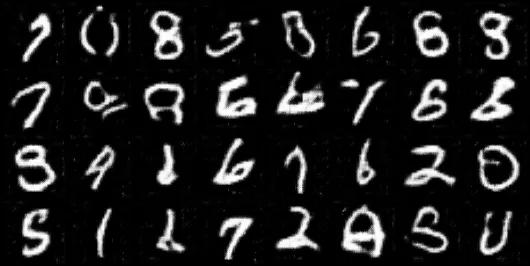

After training for 10 cycles through the training data (called epochs in machine learning), we can see some recognisable numbers. As shown in the image below, they are not perfect, but I can see a 1, 2, 5, 6, 7, maybe an 8, and a 0.
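Putting the pieces together, a training run alternates the two updates and, like the progress figure, takes a snapshot after each epoch (one full pass through the training data). This is a toy sketch in plain Python, not the PyTorch code behind the figures: the "images" are single numbers drawn around 4, and `train_toy_gan` and its parameters are hypothetical.

```python
import math
import random


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    z = math.exp(t)
    return z / (1.0 + z)


def train_toy_gan(real_data, epochs=10, batch_size=32, lr=0.05, seed=0):
    """Alternate Discriminator and Generator updates; keep a snapshot after every epoch.

    One epoch is one full pass over real_data, taken in mini-batches of batch_size.
    """
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # Generator G(z) = a*z + b
    w, c = 0.0, 0.0  # Discriminator D(x) = sigmoid(w*x + c)
    snapshots = []
    for _ in range(epochs):
        data = real_data[:]
        rng.shuffle(data)
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            noise = [rng.gauss(0.0, 1.0) for _ in batch]
            fakes = [a * z + b for z in noise]
            # 1) Discriminator step: raise D(real), lower D(fake) (binary cross-entropy).
            grad_w = grad_c = 0.0
            for real, fake in zip(batch, fakes):
                d_real, d_fake = sigmoid(w * real + c), sigmoid(w * fake + c)
                grad_w += -(1.0 - d_real) * real + d_fake * fake
                grad_c += -(1.0 - d_real) + d_fake
            w -= lr * grad_w / len(batch)
            c -= lr * grad_c / len(batch)
            # 2) Generator step: use the updated Discriminator's feedback to look more real.
            grad_a = grad_b = 0.0
            for z in noise:
                feedback = -(1.0 - sigmoid(w * (a * z + b) + c)) * w
                grad_a += feedback * z
                grad_b += feedback
            a -= lr * grad_a / len(noise)
            b -= lr * grad_b / len(noise)
        # Snapshot: 8 fresh samples, a bit like one panel of the progress figure.
        snapshots.append([a * rng.gauss(0.0, 1.0) + b for _ in range(8)])
    return snapshots


data_rng = random.Random(42)
real_data = [data_rng.gauss(4.0, 1.0) for _ in range(1024)]  # stand-in for the real images
snapshots = train_toy_gan(real_data)
for epoch, samples in enumerate(snapshots, start=1):
    print(f"epoch {epoch}: mean sample = {sum(samples) / len(samples):.2f}")
```

A straight-line Discriminator like this one can mostly only tell the Generator where the real data sits, not its full shape, and the snapshots need not improve smoothly; as with the 7th image above, a GAN can get worse for a while before recovering.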

Now that my Generator is trained, I can ask it to generate as many new images as I want.
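Generating new images after training needs nothing but fresh random noise pushed through the trained Generator. A minimal sketch with the toy one-number Generator, assuming hypothetical trained parameters `a = 1.0` and `b = 4.0`; in PyTorch this would roughly correspond to passing a new batch of `torch.randn` noise through the Generator.

```python
import random


def generate(a, b, how_many, seed=None):
    """Sample new outputs from a trained toy Generator G(z) = a*z + b.

    Each new output only needs a fresh draw of random noise; no training data is used.
    """
    rng = random.Random(seed)
    return [a * rng.gauss(0.0, 1.0) + b for _ in range(how_many)]


# Hypothetical parameters standing in for a trained Generator.
samples = generate(a=1.0, b=4.0, how_many=5, seed=7)
more_samples = generate(a=1.0, b=4.0, how_many=1000)
print(len(samples), len(more_samples))  # prints: 5 1000
```

Using the same seed gives the same samples again; leaving it out gives new ones every time.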

So what does this have to do with art?

In principle, there isn’t much difference between training a GAN to create handwritten numbers and training one to create artwork.

However, these machine-learning approaches can take a long time to train, so we often test the concept on smaller problems first. This handwritten example took 10 minutes to train on an NVIDIA RTX 3060 Ti graphics card; training on a CPU would have taken significantly longer.

But using the same approach, you can simply change the training dataset and create a whole new set of images.

Below is a very high-resolution example from a GAN trained on the celebrity faces dataset [source]. Here it has created images of new faces.

And below is an example of a GAN trained with the work of Vincent van Gogh [source].

For a more technical explanation of GANs, check out this paper: Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks (arxiv.org)

Summary

So, I created a GAN using PyTorch and trained it on images of handwritten numbers. After 10 minutes of training, it could produce reasonable new handwritten numbers.

GANs have two neural networks, a Discriminator and a Generator. The Generator tries to make an image, and the Discriminator decides whether it looks like a real one.

GANs can be trained on any image data to create new images in the style of the images they were trained on. The results are not copies; instead, they are new images that closely resemble the training data.